There are often lots of small - and not so small - activities that communications teams want to carry out online that would make their jobs easier, but that aren't really tasks to give to IT teams.

For example, you may wish to update your agency's Facebook and Twitter profile pictures when your logo changes, automatically post your blog posts to LinkedIn and Facebook, be sent an email whenever someone tweets at you or receive an alert whenever your Minister is mentioned in a breaking news story.

This is where it is useful to get familiar with services like IFTTT and Yahoo Pipes.

IFTTT, or "IF This Then That", is a simple logic engine that allows you to string together a trigger and an action to create a 'recipe' using the format IF [trigger] then [action].

For example, below is a recipe used to automatically tweet new posts on this blog:

(Image: A recipe in IFTTT)

This sounds very simple, but it can be a very powerful labour-saving tool. Each trigger and action can be from different online services, or even physical devices.

(Image: A recipe in IFTTT)

Recipes can be more complex, with various parameters and settings you can configure (for example the recipe above has been configured to append #gov2au to the tweets).
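For readers who like to see the logic written out, here is a minimal sketch of the IF [trigger] then [action] idea in Python. It is not how IFTTT itself is built; the `Recipe` class, the event dictionaries and the function names are all invented for illustration, and the only detail taken from the recipe above is the #gov2au hashtag setting.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Recipe:
    """IF [trigger] THEN [action], modelled as two plain functions (hypothetical)."""
    trigger: Callable[[dict], bool]  # returns True when an event matches
    action: Callable[[dict], str]    # what to do with a matching event

    def run(self, event: dict) -> Optional[str]:
        # The action only fires when the trigger condition holds.
        if self.trigger(event):
            return self.action(event)
        return None


def is_new_post(event: dict) -> bool:
    # Trigger: a new post has appeared on the blog.
    return event.get("type") == "new_blog_post"


def tweet_with_hashtag(hashtag: str) -> Callable[[dict], str]:
    # The hashtag plays the role of a recipe setting you configure once.
    def action(event: dict) -> str:
        return f"{event['title']} {event['url']} {hashtag}"
    return action


tweet_new_posts = Recipe(trigger=is_new_post, action=tweet_with_hashtag("#gov2au"))

post = {"type": "new_blog_post", "title": "Automating online tasks", "url": "http://example.com/post"}
print(tweet_new_posts.run(post))                     # text of the tweet to send
print(tweet_new_posts.run({"type": "new_comment"}))  # None: trigger did not match
```

Keeping the trigger and the action as separate pieces is what lets either one come from a different service.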

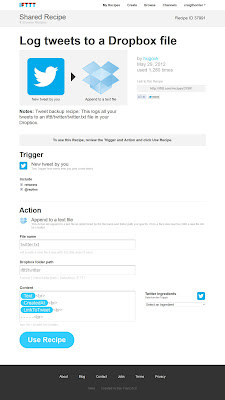

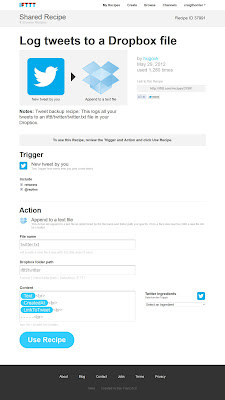

For example, at right is the full page for a recipe that archives your Tweets to a text file in your Dropbox.

Besides connecting the trigger (a new tweet from you) with the action (posting your tweet in Dropbox), you can choose whether to include retweets and @replies.

You can set the file name where your tweets will be stored and the file path in Dropbox, plus you can set the content that is saved and how it will be formatted.

In this case the recipe is set to keep the text of the tweet (the 'Text' in a blue box), followed on a new line by the date it was tweeted ('CreatedAt') and then, on another new line, a permanent link to the tweet ('LinkToTweet'), followed by a line break to separate it from following tweets.

You can add additional 'ingredients' such as Tweet name and User Name - essentially whatever information Twitter shares for each tweet.
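The layout described above can be sketched in a few lines of Python. The ingredient names `Text`, `CreatedAt` and `LinkToTweet` come from the recipe; everything else is an assumption for illustration, including the function names, the sample tweets, and the rough way retweets and @replies are recognised here (by how the text starts).

```python
from string import Template

# One archived entry: text, date, permanent link, then a blank line as separator.
ENTRY_TEMPLATE = Template("$Text\n$CreatedAt\n$LinkToTweet\n\n")


def format_entry(tweet: dict) -> str:
    # Fill the template from the tweet's 'ingredients'.
    return ENTRY_TEMPLATE.substitute(tweet)


def archive(tweets, include_retweets=False, include_replies=False) -> str:
    """Build the text that would be appended to the archive file."""
    entries = []
    for tweet in tweets:
        # Assumption: retweets start with "RT " and replies start with "@".
        if tweet["Text"].startswith("RT ") and not include_retweets:
            continue
        if tweet["Text"].startswith("@") and not include_replies:
            continue
        entries.append(format_entry(tweet))
    return "".join(entries)


sample_tweets = [
    {"Text": "New blog post is up", "CreatedAt": "May 1, 2012", "LinkToTweet": "http://example.com/1"},
    {"Text": "RT @someone: worth reading", "CreatedAt": "May 2, 2012", "LinkToTweet": "http://example.com/2"},
    {"Text": "@someone thanks!", "CreatedAt": "May 3, 2012", "LinkToTweet": "http://example.com/3"},
]

print(archive(sample_tweets))
```

Changing the template string is the equivalent of rearranging the blue ingredient boxes in the recipe.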

Rather than having to invent and test your own recipes, IFTTT allows people to share their recipes with others, meaning you can often find a useful recipe, rather than having to create one from scratch.

In fact, I didn't create either of the recipes I've illustrated; they were already listed.

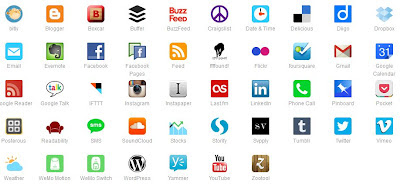

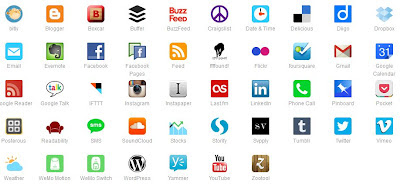

There are currently over 36,000 recipes to choose from for the 47 services supported - from calendars, to RSS feeds, to email, to social networks, to blogs and video services, from SMS to physical devices.

(Image: All the online services that can be 'triggers' for IFTTT)

It is even possible to string together recipes in sequence.

For example, if I wanted to update my profile image in Facebook, Twitter, Blogger and LinkedIn, I could set up a series of recipes such as:

- If [My Facebook profile picture updates] Then [Update my Twitter profile picture to match]

- If [My Twitter profile picture updates] Then [Update my Blogger profile picture to match]

- If [My Blogger profile picture updates] Then [Update my LinkedIn profile picture to match]

- If [My LinkedIn profile picture updates] Then [Update my Facebook profile picture to match]

Using these four recipes, whenever I update one profile picture, they will all update.

Also it's easy to turn recipes on and off - meaning that you can stop them working when necessary (such as if you want to use different profile pictures).
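To see why a ring of four recipes updates everything and then settles down, here is a small simulation in Python. It rests on one assumption that is mine, not something IFTTT documents here: a recipe does nothing when the target picture already matches, which is what stops the ring from running forever. The function and variable names are invented for the sketch.

```python
from collections import deque

# The four recipes from the list above, as (trigger service, action service).
RECIPES = [
    ("Facebook", "Twitter"),
    ("Twitter", "Blogger"),
    ("Blogger", "LinkedIn"),
    ("LinkedIn", "Facebook"),
]


def update_picture(pictures: dict, service: str, new_picture: str) -> list:
    """Change one profile picture, let the recipes propagate it, return a log."""
    log = []
    pictures[service] = new_picture
    pending = deque([service])  # services whose picture has just changed
    while pending:
        changed = pending.popleft()
        for source, target in RECIPES:
            # Assumption: nothing happens if the target already matches,
            # so the chain stops once it comes back around.
            if source == changed and pictures[target] != pictures[source]:
                pictures[target] = pictures[source]
                log.append(f"{source} -> {target}")
                pending.append(target)
    return log


pictures = {name: "old-logo.png" for name in ("Facebook", "Twitter", "Blogger", "LinkedIn")}
for step in update_picture(pictures, "Blogger", "new-logo.png"):
    print(step)
print(pictures)
```

Starting from any of the four services gives the same end result, because each recipe hands the change on to the next one in the ring.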

However, there are limits to an IF THEN system, which is where a tool like Yahoo Pipes gets interesting.

Yahoo Pipes is a service that takes inputs, such as an RSS or data feed, webpage, spreadsheet or data from a database, lets you manipulate, filter and combine them with other data, and then provides an output - all without any programming knowledge.

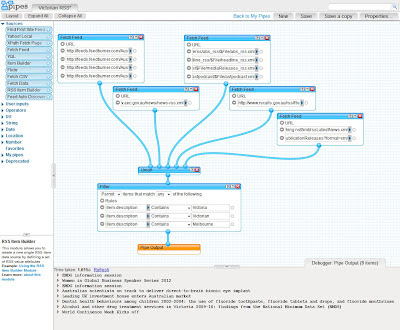

This sounds a bit vague, so here's a basic example - say you wanted to aggregate all news related to Victoria released by Australian Government agencies in media releases.

To do this in Yahoo Pipes you'd fetch RSS feeds from the agencies you were interested in, 'sploosh' them together as a single file, filter out any releases that don't mention 'Victoria', then output what is left as an RSS feed.

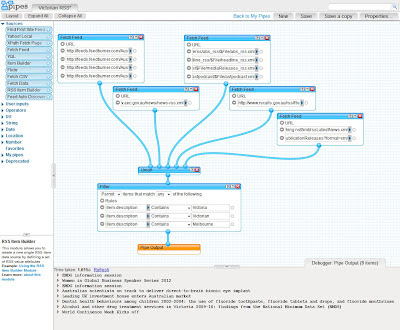

(Image: Building a Yahoo Pipe)

But that's getting ahead of ourselves a little... To the right is an image depicting how I did this with Yahoo Pipes.

Here's how it works...

First you'll need to go to pipes.yahoo.com and log in with a Yahoo account.

I started by creating a set of tools to fetch RSS from Australian Government agencies. These are the top five blue boxes. To create each I simply dragged the Fetch feed function from the 'sources' section of the left-hand menu onto the main part of the screen and then pasted each RSS feed URL into the text fields provided (drawing from the RSS list in Australia.gov.au).

Next, to combine these feeds, I used one of the 'operator' functions from the left menu, named Union. This lets you combine the outputs of separate functions into a single output file. To combine the Fetch feed RSS feeds all I needed to do was click on the bottom circle under each (their output circle) and drag the blue line to a top circle on the Union box (the input circle).

Then I created a Filter, also an 'operator' function and defined the three conditions I wanted to include in my final output - news items with 'Victoria', 'Victorian' or 'Melbourne'. All others get filtered out. I linked the Filter's input circle to the Union's output circle, then linked the output from the Filter to the Pipe Output.

Then I tested that the system worked by clicking on the blue header for each box and viewing their output in the Debugger window at bottom.
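You don't need any code to build the pipe, but for anyone curious about what the boxes are doing, the same fetch, union and filter steps can be sketched in Python. This is only an approximation of the idea, not how Yahoo Pipes works internally. The two feeds below are invented sample data standing in for real agency feeds, and the function names are my own; only the three filter words come from the pipe above.

```python
import xml.etree.ElementTree as ET

# Invented sample feeds standing in for the agency RSS feeds.
FEED_A = """<rss version="2.0"><channel><title>Agency A</title>
<item><title>New funding for Victorian schools</title><link>http://example.com/a1</link>
<description>Details of the program.</description></item>
<item><title>Budget update</title><link>http://example.com/a2</link>
<description>National figures released.</description></item>
</channel></rss>"""

FEED_B = """<rss version="2.0"><channel><title>Agency B</title>
<item><title>Melbourne office opens</title><link>http://example.com/b1</link>
<description>A new shopfront for services.</description></item>
<item><title>Queensland roads upgrade</title><link>http://example.com/b2</link>
<description>Works begin next month.</description></item>
</channel></rss>"""

KEYWORDS = ("Victoria", "Victorian", "Melbourne")


def fetch_feed(rss_text: str) -> list:
    """Stand-in for a Fetch feed box: parse RSS text into a list of items."""
    root = ET.fromstring(rss_text)
    return [
        {
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            "description": item.findtext("description", default=""),
        }
        for item in root.iter("item")
    ]


def union(*feeds: list) -> list:
    """Stand-in for the Union box: merge several item lists into one."""
    combined = []
    for feed in feeds:
        combined.extend(feed)
    return combined


def filter_items(items: list, keywords=KEYWORDS) -> list:
    """Stand-in for the Filter box: keep items mentioning any keyword."""
    return [
        item for item in items
        if any(word in item["title"] or word in item["description"] for word in keywords)
    ]


pipe_output = filter_items(union(fetch_feed(FEED_A), fetch_feed(FEED_B)))
for item in pipe_output:
    print(item["title"], item["link"])
```

Each function lines up with one box on the screen, and nesting the calls plays the role of the blue connecting lines.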

Note that pipes don't have to be published; you can keep them private. You can also publish their outputs as RSS feeds or as a web service (using JSON) for input into a different system. You can even get the results as a web badge for your site, by email, phone or as PHP for websites.

|

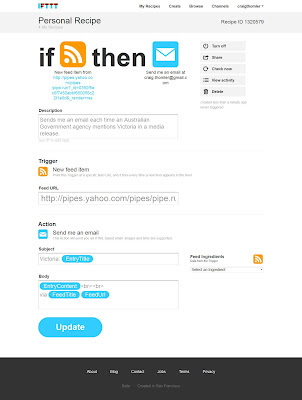

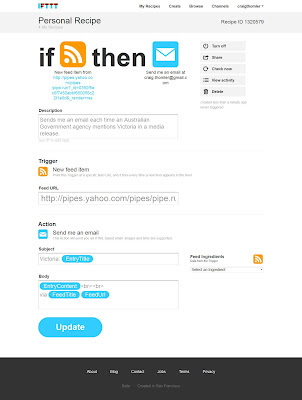

An IFTTT recipe built from the Yahoo Pipe above

(click to enlarge) |

Alternatively you can even combine them with IFTTT - for example creating a recipe that sends you an email every time an Australian Government agency mentions Victoria in a media release.

In fact I created this recipe (in about 30 seconds) to demonstrate how easy it was. You can see it to the right, or go and access it at IFTTT at the recipe link: http://ifttt.com/recipes/43242
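As a rough picture of what a "new feed item, then email me" recipe has to do behind the scenes, here is a short Python sketch. It assumes the trigger works by remembering which links it has already seen; that is my guess at the general approach rather than a description of IFTTT's internals. The class and function names and the email address are made up, and the message is only composed, not sent.

```python
from email.message import EmailMessage


class NewItemTrigger:
    """Hypothetical trigger: fires once for each feed item not seen before."""

    def __init__(self):
        self.seen_links = set()

    def check(self, items: list) -> list:
        new_items = []
        for item in items:
            if item["link"] not in self.seen_links:
                self.seen_links.add(item["link"])
                new_items.append(item)
        return new_items


def build_alert(item: dict, recipient: str) -> EmailMessage:
    """Action: compose (but do not send) an email about one feed item."""
    message = EmailMessage()
    message["To"] = recipient
    message["Subject"] = f"New media release: {item['title']}"
    message.set_content(item["link"])
    return message


trigger = NewItemTrigger()
feed_now = [{"title": "Melbourne office opens", "link": "http://example.com/b1"}]
feed_later = feed_now + [{"title": "Victorian grants announced", "link": "http://example.com/c1"}]

for feed in (feed_now, feed_later):
    for item in trigger.check(feed):
        alert = build_alert(item, "me@example.com")
        print(alert["Subject"])
```

The second pass through the feed only produces an alert for the item that was not there the first time.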

So that's how easy it now is to automate actions, or activities, online - with no IT skills, in a short time.

There are lots of simple, and complex, tasks that can be automated easily and quickly with a little creativity and imagination.

You can also go back and modify your recipes and pipes, or turn them on and off, when needed, and you can share them with others in your team or across agencies quickly and easily.

Have you a task you'd like to automate?

- Finding mentions of your Department on Twitter or Facebook

- Tracking mentions of your program in the media releases of other agencies

- Archiving all your Tweets and Facebook statuses

- Receiving an SMS alert when the weather forecast is for rain (so you take your umbrella)

- Posting your Facebook updates, Blog posts and media releases automatically on Twitter spread throughout the day (using Buffer)