It's not unusual for agencies to spend tens of thousands of dollars on an online presence to educate people about, say, a new program - a website providing information, eligibility details and application processes, plus a couple of social media channels for engagement.

However, it is rare for an agency to spend even thousands of dollars on social media monitoring and website analytics to determine whether that online presence is effective, to identify where improvements could be made, and to track and audit its progress over time.

I've worked in several government agencies that had some kind of research unit, which spent its time analysing customer and program information to provide insights that help improve policies and service delivery. Unfortunately, even in the five and a half years I spent in the public sector, I saw these units cut in responsibilities, reduced in size and even turned into contractor units that simply managed external research consultants.

When I entered the public service these units were still new to the online channel, unsure of how to analyse it or how to weigh the insights it might provide. By the time I left, although online had been recognised as an important channel, the capability of research units to integrate it into their other analysis had been sadly diminished by budget cuts.

This trend in government towards outsourcing or simply disregarding data analysis, at a time when society has more data at its fingertips than ever before, is worrying. What trends are going unnoticed? What decisions are being made without consideration for the facts?

I have a special concern about how government agencies regard web and social media analysis, which in my view is an increasingly useful source of near real-time intelligence and longer-term trend data about how people think and behave.

As I've never been able to afford to have a web analytics expert in one of my teams in government, I've spent a great deal of my own time diving into website and social media stats to make sense of why people visited specific government sites, what they were looking for and where they went when they didn't find what they needed.

I've also used third party tools - from Hitwise to Google Trends - to identify the information and services people needed from government, and to present the findings to agency subject matter experts and content owners to inform their decisions on what information to provide.

I know that some agencies have begun using social media analysis tools to track what people are saying online about their organisation and programs, often to intervene with facts or customer service where relevant, and this is a good and important use of online analytics.

I'm even aware of web and social media analytics being provided back to policy areas to help debunk beliefs, much as I used to give different program areas snapshots of their web analytics to help them understand how effective their content was with the audiences they targeted (when I had time).

With the rise in interest in open data, what I'd like to see in government agencies is more awareness of how useful their own web analytics can be in helping them understand and meet citizen needs cost-efficiently. I would also like to see more resources committed to online analytics and analysts within agencies, to help their subject matter experts keep improving how they communicate their program, policy or topic to lay citizens.

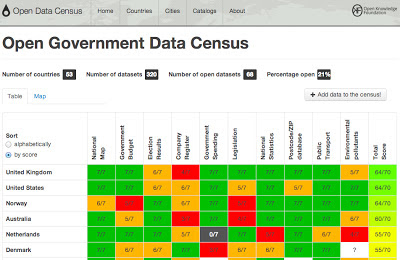

It may also be a good time to look into the intersection of open data and online analytics - open analytics perhaps?

I would love to see agencies publishing their web traffic and social media analytics periodically, or live, allowing government websites to be held accountable in much the same way that published crime statistics help keep police accountable.

Maybe certain web statistics could even be published as open data feeds, so others might mash up the traffic across agencies and build a full picture of what the public is seeking from government and where they go to get it. This could even allow a senior Minister, Premier or Prime Minister to have full visibility of government web traffic across an entire state or nation - something that would take months to provide today.

This last suggestion may even overcome the issue agencies have in affording, or for that matter finding, good web analytics people. Instead, external developers could be encouraged to uncover the best and worst government sites based on the data and provide a view of what people really want from government in practice, based on how they engage with government online.
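To give a rough sense of how simple such a mash-up could be, here is a minimal Python sketch. Everything in it is an assumption for illustration: no such feeds exist that I know of, the agency names, pages and visit counts are made up, and the feed shape (a list of page and visit records per agency) is just one plausible format.

```python
from collections import Counter

# Hypothetical open analytics feeds: each agency publishes a list of
# page-level visit counts. Names and numbers are invented for illustration.
SAMPLE_FEEDS = {
    "agency-a": [
        {"page": "/apply", "visits": 1200},
        {"page": "/eligibility", "visits": 800},
    ],
    "agency-b": [
        {"page": "/contact", "visits": 300},
        {"page": "/apply", "visits": 500},
    ],
}


def combine_feeds(feeds):
    """Merge per-agency feeds into whole-of-government visit totals."""
    by_agency = Counter()  # total visits per agency
    by_page = Counter()    # visits per (agency, page) pair
    for agency, rows in feeds.items():
        for row in rows:
            by_agency[agency] += row["visits"]
            by_page[(agency, row["page"])] += row["visits"]
    return by_agency, by_page


by_agency, by_page = combine_feeds(SAMPLE_FEEDS)
print(by_agency.most_common())   # agencies ranked by total traffic
print(by_page.most_common(3))    # the three most visited pages overall
```

With real feeds, the same few lines would rank agencies and pages across an entire government, which is the kind of view that currently takes months to assemble by hand.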